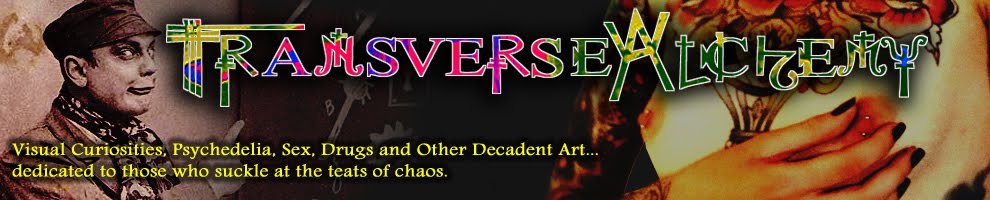

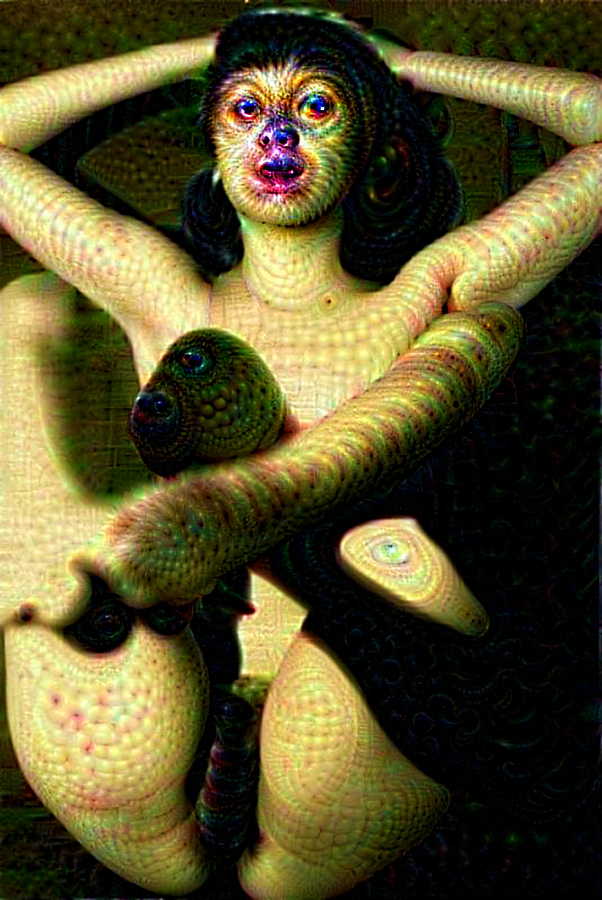

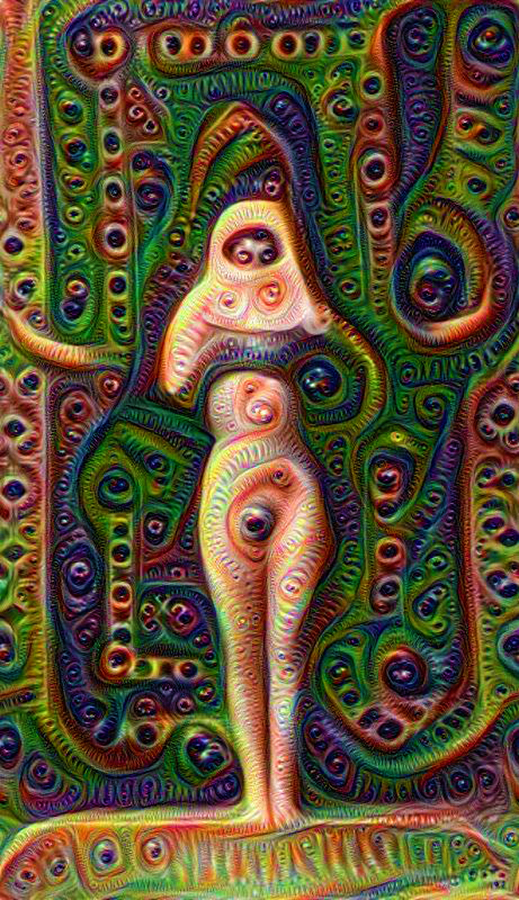

Deep Dream. Because I had to.

So if you haven’t heard of Google’s “Deep Dream” yet, congratulations on finally crawling out from under that rock. Google uses artificial neural networks (ANNs) to classify and organize the millions of photos indexed in Google Images.

The artificial neural network is “trained” by showing it millions of examples and gradually adjusting its parameters until it gives the classifications the developers want. A network typically consists of 10 to 30 stacked layers of artificial neurons. Each image is fed into the input layer, which then talks to the next layer, and so on until the “output” layer is reached. The network’s “answer” comes from this final output layer. For example, the first layer might look for edges or corners. Intermediate layers interpret those basic features to look for overall shapes or components, like a door or a leaf. The final few layers assemble those into complete interpretations: these neurons activate in response to very complex things such as entire buildings or trees.
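If the “layers talking to layers” bit feels abstract, here is a minimal sketch in plain Python. It is not Google’s network or anything from Caffe: the layer sizes, the random weights, and the helper names (`dense`, `relu`, `make_layer`, `forward`) are all made up for illustration. It only shows the shape of the idea, where each layer’s output becomes the next layer’s input and the last layer’s output is the “answer”.

```python
import random

def relu(values):
    # A neuron only "fires" when its weighted input is positive.
    return [max(0.0, v) for v in values]

def dense(inputs, weights, biases):
    # One row of weights per neuron in this layer.
    return [sum(w * x for w, x in zip(row, inputs)) + b
            for row, b in zip(weights, biases)]

def make_layer(n_in, n_out, rng):
    # Hypothetical untrained layer: random weights, zero biases.
    weights = [[rng.uniform(-1, 1) for _ in range(n_in)] for _ in range(n_out)]
    biases = [0.0] * n_out
    return weights, biases

def forward(pixels, layers):
    # Feed the input through every layer, keeping each layer's activations.
    activations = [pixels]
    current = pixels
    for weights, biases in layers:
        current = relu(dense(current, weights, biases))
        activations.append(current)
    return activations

rng = random.Random(0)
sizes = [8, 6, 4, 3]  # toy sizes; a real network has 10 to 30 much wider layers
layers = [make_layer(a, b, rng) for a, b in zip(sizes, sizes[1:])]
pixels = [rng.random() for _ in range(sizes[0])]

activations = forward(pixels, layers)
answer = activations[-1]  # the output layer's activations
print("values per layer:", [len(a) for a in activations])
```

Training would mean nudging those weights until `answer` matches the labels you want; this sketch skips that part entirely.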

Google has since released the code in an IPython notebook, allowing programmers to make their own “neural network inspired images”. The code is based on Caffe, uses available open source packages, and is designed to have as few dependencies as possible. Since its release, a few web interfaces have sprung up that let the Python/Caffe-inhibited play with the network on their own. One of these is the Psychic VR Lab interface and another is the Dreamscope Dreamer.

The computer can generate its own imagery because it is undergoing something similar to what humans experience during hallucinations, in which our brains are free to follow the impulse of any recognizable imagery and exaggerate it in a self-reinforcing loop. According to Karl Friston, professor of neuroscience at University College London, you could think of it as a psychedelic experience where “you are free to explore all the sorts of internal high-level hypotheses or predictions about what might have caused sensory input”.
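That self-reinforcing loop can be sketched as gradient ascent on the image itself: see what a neuron responds to, then nudge the pixels so it responds even more, and repeat. The toy below is an assumption-heavy stand-in, not the released notebook: the “network” is a single hypothetical detector neuron, the “image” is 16 numbers, and the step size and normalization are picked just to keep the demo stable.

```python
import random

def dot(a, b):
    return sum(x * y for x, y in zip(a, b))

def dream_step(image, detector, step_size=0.1):
    # How strongly does the (hypothetical) detector respond to the image?
    activation = dot(detector, image)
    # Objective: 0.5 * activation**2, so its gradient with respect to the
    # image is activation * detector.
    gradient = [activation * w for w in detector]
    # Normalize by the mean absolute gradient so every step has a similar size
    # (an assumption made for this toy).
    scale = sum(abs(g) for g in gradient) / len(gradient)
    if scale == 0.0:
        return image  # nothing to amplify
    # Gradient ascent: change the image, not the network.
    return [x + step_size * g / scale for x, g in zip(image, gradient)]

rng = random.Random(1)
detector = [rng.uniform(-1, 1) for _ in range(16)]   # stand-in for a "dog" neuron
image = [rng.uniform(-0.1, 0.1) for _ in range(16)]  # faint starting noise

history = [abs(dot(detector, image))]
for _ in range(20):
    image = dream_step(image, detector)
    history.append(abs(dot(detector, image)))

print("response at start:", history[0])
print("response at end:  ", history[-1])
```

Whatever faint hint of the pattern was in the starting noise gets stronger on every pass, which is the “exaggerate it” part of the loop.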

Deep Dream sometimes appears to follow particular rules; these aren’t quite random, but rather a result of the source material. Dogs appear so often most likely because there was a preponderance of dogs in the initial batch of imagery, and thus the program is quick to recognize a “dog.” However, edits to the code can produce something similar to “filters”, which change the results in a fairly predictable manner.

Have fun.

Links:

Google Research Blog: “DeepDream – a code example for visualizing Neural Networks”

Psychic VR Lab

Dreamscope
